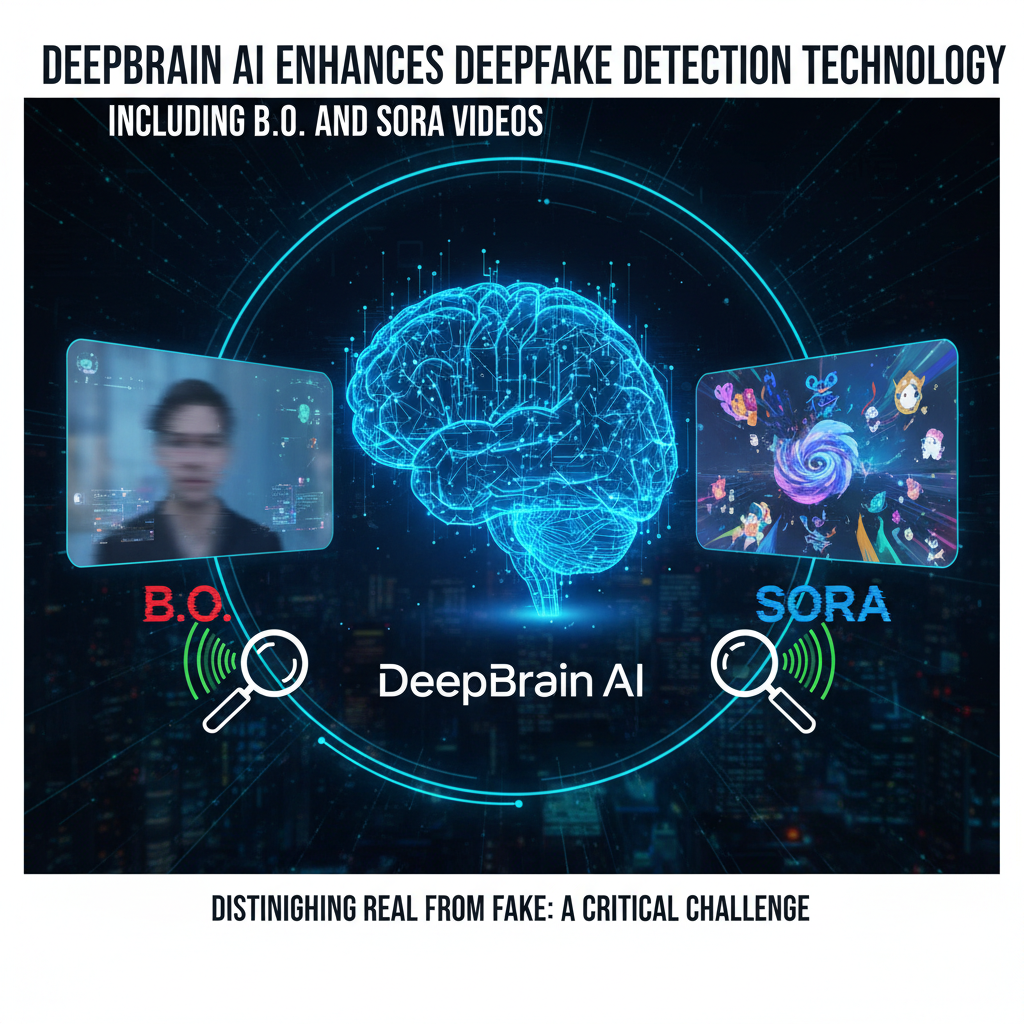

DeepBrain AI Enhances Deepfake Detection Technology to Cover Veo and Sora Videos

As generative artificial intelligence (AI) transforms video production, distinguishing what is real from what is fake has become one of the most critical challenges facing society. In response, DeepBrain AI, a company specializing in generative AI, has further advanced its deepfake detection solution and has committed to ensuring the reliability of digital video content.

The sophistication of generative AI, which can swiftly create realistic videos from just text or images, has reached an astonishing level. It is becoming increasingly difficult to determine the authenticity of images and videos produced by cutting-edge video generation platforms like Google's 'Veo' and OpenAI's 'Sora'. DeepBrain AI has targeted this very issue, and as part of the 'Cultural Technology R&D' project overseen by the Ministry of Culture, Sports and Tourism and the Korea Creative Content Agency, it has significantly expanded the scope of its existing deepfake detection technology to include generative AI-based content.

DeepBrain AI's deepfake detection solution, 'AI Detector', is already in use across sectors such as public institutions, finance, and education. At its core, the solution analyzes minute pixel-level differences to determine whether content is a deepfake. The enhanced AI Detector can now also identify content created with the latest generative AI platforms, such as Google Veo and OpenAI Sora.

Furthermore, this advanced functionality is also provided as an API (Application Programming Interface) service. This means that external companies or institutions can easily utilize DeepBrain AI's powerful verification technology without the need for complex system development, significantly enhancing technological accessibility.

Jang Se-young, CEO of DeepBrain AI, stated, "The rapid spread of generative AI technology has made distinguishing the authenticity of content a critical challenge for society as a whole." He further expressed his ambition that through this technological advancement, DeepBrain AI would "actively contribute to identifying the manipulation of videos produced by generative AI and building an environment where AI content can be used transparently."

DeepBrain AI plans to keep taking the lead in addressing the social problems caused by synthetic videos and manipulated content, and to continue strengthening its verification systems. The company presents this work not merely as technological development, but as an effort to uphold trust and transparency in the digital age.
